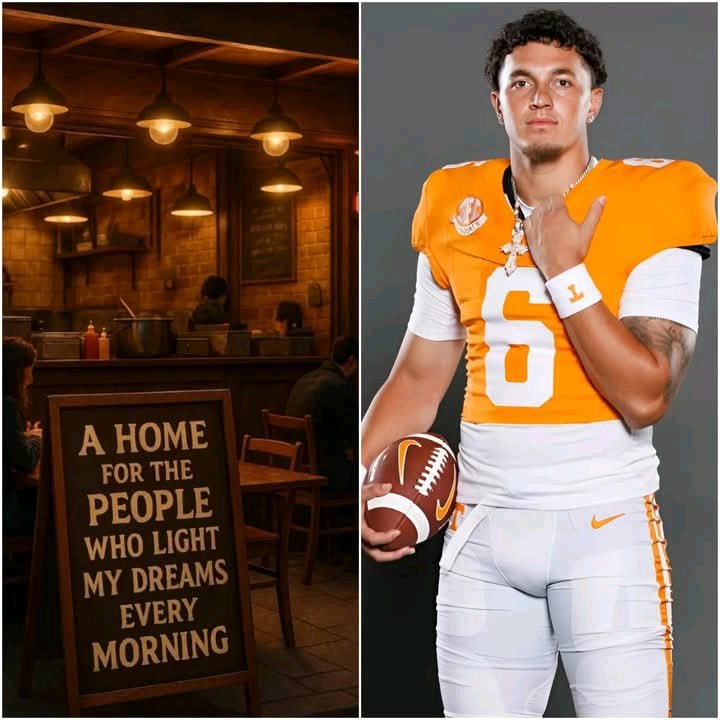


SAN FRANCISCO, CA - The artificial intelligence (AI) landscape is undergoing a period of unprecedented growth, fueled by advancements in generative models, rapid deployment across diverse industries, and a surge of investment capital. However, this explosive expansion is raising serious concerns among policymakers and industry leaders, prompting a complex and evolving regulatory response.

At the forefront of the debate are the power and potential dangers of AI. Generative AI, capable of creating realistic text, images, and even video, has demonstrated remarkable abilities, from automating complex tasks to assisting in scientific discovery. This has ignited a global race for AI dominance, with tech giants like Google, Microsoft, and DeepAI pouring billions into research and development.

However, this rapid advancement is not without its challenges. The ease with which AI can generate realistic yet fabricated content raises concerns about the spread of misinformation and disinformation. Deepfakes, increasingly sophisticated synthetic media, pose a threat to individuals and institutions alike, raising questions about trust, authenticity, and the integrity of information.

Furthermore, the potential for AI to perpetuate and even amplify existing biases is a significant concern. AI models are trained on vast datasets, and if those datasets reflect societal biases, the resulting AI systems can inadvertently discriminate against certain groups. This can lead to unfair outcomes in areas like hiring, loan applications, and even criminal justice.

“We are at a critical juncture,” stated Dr. Anya Sharma, a leading AI ethicist at Stanford University. “The incredible potential of AI is undeniable, but we must address the ethical and societal implications proactively. Allowing AI to evolve unchecked would be a grave mistake.”

The regulatory response to this fast-moving technological frontier is fragmented and evolving. The European Union is leading the charge with its proposed AI Act, a comprehensive piece of legislation aimed at establishing stringent rules for the development and deployment of AI systems. The Act proposes a risk-based approach, categorizing AI applications based on their potential impact and imposing varying levels of scrutiny. High-risk AI systems, such as those used in law enforcement or healthcare, would face the most rigorous requirements, including transparency, accountability, and human oversight.

In the United States, the regulatory landscape is more complex. While there is no single federal law specifically governing AI, various agencies are taking action within their existing mandates. The Federal Trade Commission (FTC) is focused on consumer protection, investigating potential instances of deceptive AI practices. The Equal Employment Opportunity Commission (EEOC) is working to ensure that AI does not lead to discriminatory hiring practices. Additionally, several states, including California and Illinois, are exploring or enacting their own AI-related legislation.

The debate over AI regulation is often contentious. Tech companies argue that overly restrictive regulations could stifle innovation and hinder the development of beneficial AI applications. They advocate for a more flexible and self-regulatory approach, emphasizing the importance of industry collaboration and the development of ethical guidelines.

However, critics argue that self-regulation alone is insufficient, pointing to the rapid pace of technological advancement and the potential for companies to prioritize profits over safety and ethical considerations. They advocate for strong government oversight, independent audits, and robust enforcement mechanisms to ensure that AI is developed and deployed responsibly.

As the debate continues, several key questions remain unanswered. What level of transparency is necessary to ensure that AI systems are understood and trusted? How can we mitigate the risks of bias and discrimination? Who is ultimately responsible when an AI system causes harm?

The answers to these questions will shape the future of AI and its impact on society. Governments, industry, and academia must work together to navigate this complex landscape and harness the transformative potential of AI while mitigating its risks. The coming months and years will be crucial in determining the balance between innovation and regulation, and ensuring that AI serves the best interests of humanity.
